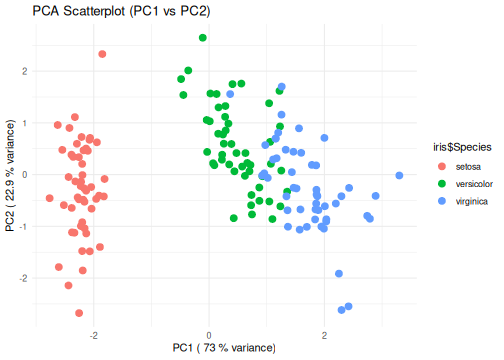

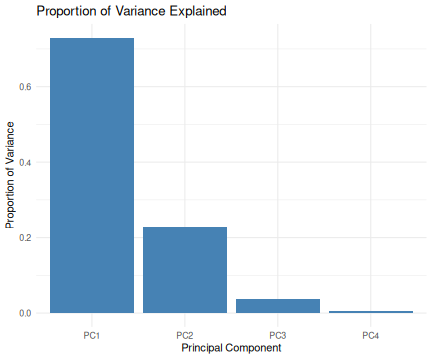

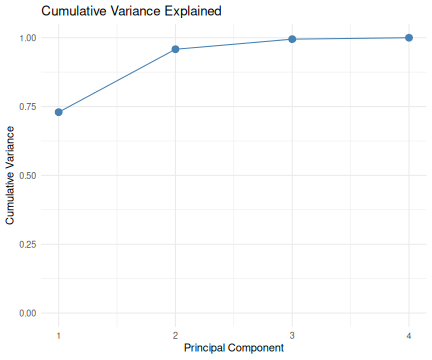

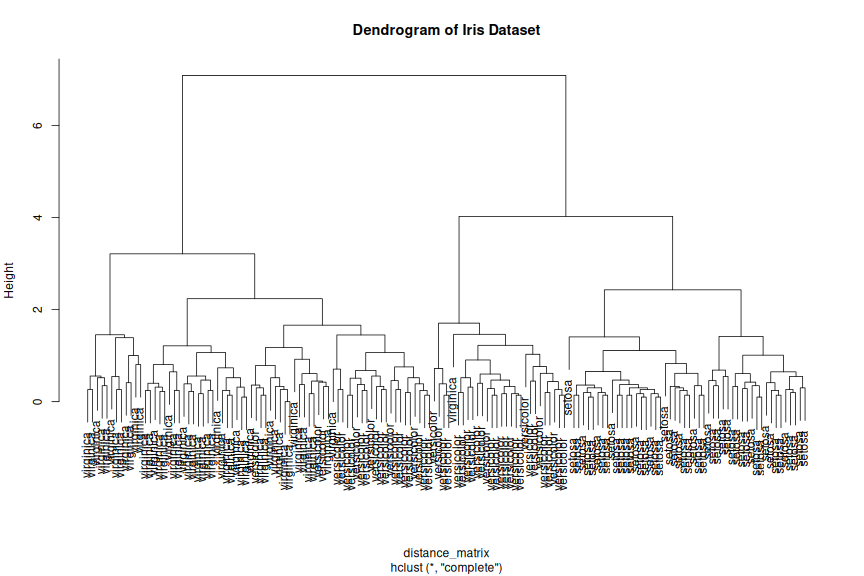

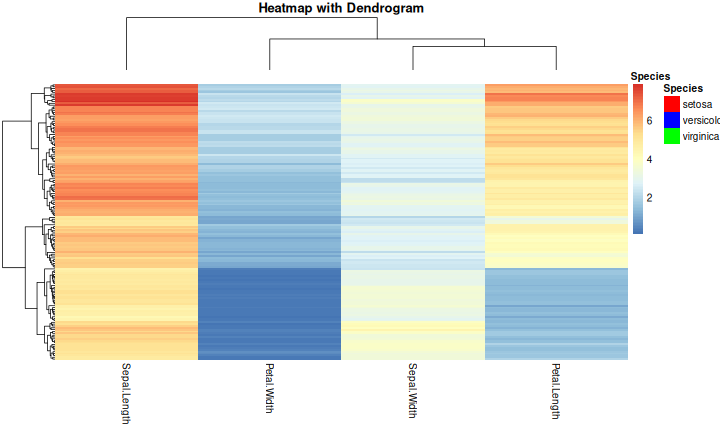

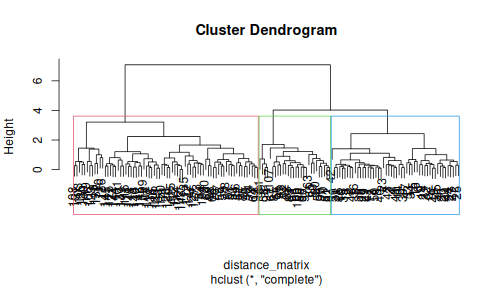

class: center, middle, inverse, title-slide .title[ # R-Basics Day 2 ] .author[ ### Bioinfomatics Core Facility ] .institute[ ### CECAD ] .date[ ### 2024/07/15 (updated: 2024-09-25) ] --- <style type="text/css"> .panelset { --panel-tab-foreground: grey; --panel-tab-active-foreground: #0051BA; --panel-tab-hover-foreground: #d22; --panel-tab-inactive-opacity: 0.5; --panel-tabs-border-bottom: #ddd; --panel-tab-font-family: Arial; } .footer { color: var(--footer_grey); font-size: 0.5em; position: absolute; bottom: 0; left: 0; bottom: 0; border-top: 1px solid var(--cecad_blue); padding: 1rem 64px 1rem 64px; } .remark-inline-code { font-family: 'Inconsolata', monospace; color: #515151; //background-color: rgba(217, 5, 2, 0.2); border-radius: 2px; /* Making border radius */ width: auto; /* Making auto-sizable width */ height: auto; /* Making auto-sizable height */ padding: 0px 2px 1px 2px; /*Making space around letters */ color: #818181; color: rgb(249, 38, 114); } </style> <style>.xe__progress-bar__container { top:0; opacity: 1; position:absolute; right:0; left: 0; } .xe__progress-bar { height: 0.25em; background-color: #0051BA; width: calc(var(--slide-current) / var(--slide-total) * 100%); } .remark-visible .xe__progress-bar { animation: xe__progress-bar__wipe 200ms forwards; animation-timing-function: cubic-bezier(.86,0,.07,1); } @keyframes xe__progress-bar__wipe { 0% { width: calc(var(--slide-previous) / var(--slide-total) * 100%); } 100% { width: calc(var(--slide-current) / var(--slide-total) * 100%); } }</style> # Slides & Code .right-column[ ### <svg aria-hidden="true" role="img" viewBox="0 0 496 512" style="height:1em;width:0.97em;vertical-align:-0.125em;margin-left:auto;margin-right:auto;font-size:inherit;fill:currentColor;overflow:visible;position:relative;"><path d="M165.9 397.4c0 2-2.3 3.6-5.2 3.6-3.3.3-5.6-1.3-5.6-3.6 0-2 2.3-3.6 5.2-3.6 3-.3 5.6 1.3 5.6 3.6zm-31.1-4.5c-.7 2 1.3 4.3 4.3 4.9 2.6 1 5.6 0 6.2-2s-1.3-4.3-4.3-5.2c-2.6-.7-5.5.3-6.2 2.3zm44.2-1.7c-2.9.7-4.9 2.6-4.6 4.9.3 2 2.9 3.3 5.9 2.6 2.9-.7 4.9-2.6 4.6-4.6-.3-1.9-3-3.2-5.9-2.9zM244.8 8C106.1 8 0 113.3 0 252c0 110.9 69.8 205.8 169.5 239.2 12.8 2.3 17.3-5.6 17.3-12.1 0-6.2-.3-40.4-.3-61.4 0 0-70 15-84.7-29.8 0 0-11.4-29.1-27.8-36.6 0 0-22.9-15.7 1.6-15.4 0 0 24.9 2 38.6 25.8 21.9 38.6 58.6 27.5 72.9 20.9 2.3-16 8.8-27.1 16-33.7-55.9-6.2-112.3-14.3-112.3-110.5 0-27.5 7.6-41.3 23.6-58.9-2.6-6.5-11.1-33.3 2.6-67.9 20.9-6.5 69 27 69 27 20-5.6 41.5-8.5 62.8-8.5s42.8 2.9 62.8 8.5c0 0 48.1-33.6 69-27 13.7 34.7 5.2 61.4 2.6 67.9 16 17.7 25.8 31.5 25.8 58.9 0 96.5-58.9 104.2-114.8 110.5 9.2 7.9 17 22.9 17 46.4 0 33.7-.3 75.4-.3 83.6 0 6.5 4.6 14.4 17.3 12.1C428.2 457.8 496 362.9 496 252 496 113.3 383.5 8 244.8 8zM97.2 352.9c-1.3 1-1 3.3.7 5.2 1.6 1.6 3.9 2.3 5.2 1 1.3-1 1-3.3-.7-5.2-1.6-1.6-3.9-2.3-5.2-1zm-10.8-8.1c-.7 1.3.3 2.9 2.3 3.9 1.6 1 3.6.7 4.3-.7.7-1.3-.3-2.9-2.3-3.9-2-.6-3.6-.3-4.3.7zm32.4 35.6c-1.6 1.3-1 4.3 1.3 6.2 2.3 2.3 5.2 2.6 6.5 1 1.3-1.3.7-4.3-1.3-6.2-2.2-2.3-5.2-2.6-6.5-1zm-11.4-14.7c-1.6 1-1.6 3.6 0 5.9 1.6 2.3 4.3 3.3 5.6 2.3 1.6-1.3 1.6-3.9 0-6.2-1.4-2.3-4-3.3-5.6-2z"/></svg> git repo [Basic_R_course_CGA](https://github.com/CECADBioinformaticsCoreFacility/Basic_R_course_CGA) `git clone https://github.com/CECADBioinformaticsCoreFacility/Basic_R_course_CGA.git` ### Slides Directly [https://cecadbioinformaticscorefacility.github.io/Basic_R_course_CGA/](https://cecadbioinformaticscorefacility.github.io/Basic_R_course_CGA/) ] .left-column[ <img src="Day2_R-Stats_files/figure-html/unnamed-chunk-3-1.png" style="display: block; margin: auto;" /> - [*p*] presenter view - [*o*] overview - [*f*] fullscreen - [*h*] help/more ] --- class: inverse, center, middle # Statistical Analysis in R --- class: inverse, center, middle # Session 1: Inferential Statistics - Part 1 --- # Chi-square test Chi-square test of independence tests whether there is a relationship between two categorical variables. The null and alternative hypotheses are: - _H_<sub>0</sub> : the variables are independent, there is no relationship between the two categorical variables. Knowing the value of one variable does not help to predict the value of the other variable - _H_<sub>1</sub> : the variables are dependent, there is a relationship between the two categorical variables. Knowing the value of one variable helps to predict the value of the other variable -- The Chi-square test of independence works by comparing the observed frequencies (so the frequencies observed in your sample) to the expected frequencies if there was no relationship between the two categorical variables (so the expected frequencies if the null hypothesis was true). -- ### assumptions - The observations are independent - Sample is large enough (Require a minimum of 5 expected counts in the contingency table) --- # Chi-square test in R .panelset[ .panel[.panel-name[Data] ``` r demo_data <- iris demo_data$size <- ifelse(demo_data$Sepal.Length < median(demo_data$Sepal.Length), "small", "big" ) table(demo_data$Species, demo_data$size) ``` ``` ## ## big small ## setosa 1 49 ## versicolor 29 21 ## virginica 47 3 ``` ] .panel[.panel-name[ggplot2] ``` r library(ggplot2) ggplot(demo_data) + aes(x = Species, fill = size) + geom_bar() ``` ] .panel[.panel-name[Plot] <img src="Day2_R-Stats_files/figure-html/Chi_square_Plot-1.png" width="40%" /> ] .panel[.panel-name[Chi-square] ``` r chisq.test(table(demo_data$Species, demo_data$size)) ``` ``` ## ## Pearson's Chi-squared test ## ## data: table(demo_data$Species, demo_data$size) ## X-squared = 86.035, df = 2, p-value < 2.2e-16 ``` ] .panel[.panel-name[summary] ``` r summary(table(demo_data$Species, demo_data$size)) ``` ``` ## Number of cases in table: 150 ## Number of factors: 2 ## Test for independence of all factors: ## Chisq = 86.03, df = 2, p-value = 2.079e-19 ``` ] .panel[.panel-name[Interpretation] From the output we see that the p-value is less than the significance level of 5%,so, we can reject the null hypothesis. In our context, rejecting the null hypothesis for the Chi-square test of independence means that there is a significant relationship between the two categorical variables (species and big or small petal length). Therefore, knowing the value of one variable helps to predict the value of the other variable. ] ] ??? Other available options ??? --- # Fisher’s Exact Test Fisher’s exact test is used when the sample is small. The hypotheses of the Fisher’s exact test are the same than for the Chi-square test. -- ### assumptions - The observations must be independent in order for the Fisher’s exact test to be valid --- # Fisher’s Exact Test in R .panelset[ .panel[.panel-name[Data] ``` r demo_data <- data.frame( "NonSmoker" = c(7, 0), "Smoker" = c(2, 5), row.names = c("Athlete", "NonAthlete"), stringsAsFactors = FALSE ) demo_data ``` ``` ## NonSmoker Smoker ## Athlete 7 2 ## NonAthlete 0 5 ``` ] .panel[.panel-name[Visualize] ``` r mosaicplot(demo_data, main = "Mosaic plot", color = TRUE ) ``` ] .panel[.panel-name[Plot] <img src="Day2_R-Stats_files/figure-html/fisher-s-exact_Plot-1.png" width="40%" /> ] .panel[.panel-name[Fisher’s exact test] ``` r fisher.test(demo_data) ``` ``` ## ## Fisher's Exact Test for Count Data ## ## data: demo_data ## p-value = 0.02098 ## alternative hypothesis: true odds ratio is not equal to 1 ## 95 percent confidence interval: ## 1.449481 Inf ## sample estimates: ## odds ratio ## Inf ``` ] .panel[.panel-name[Interpretation] From the output we see that the p-value is less than the significance level of 5%. Like any other statistical test, if the p-value is less than the significance level, we can reject the null hypothesis. In our context, rejecting the null hypothesis for the Fisher’s exact test of independence means that there is a significant relationship between the two categorical variables (smoking habits and being an athlete or not). Therefore, knowing the value of one variable helps to predict the value of the other variable. ] ] --- class: inverse, center, middle # Inferential Statistics - Part 2 --- # Dataset to be explored .panelset[ .panel[.panel-name[Data] ``` r library(tidyverse) library(ggpubr) load("../data/statistics_data.RData") dataset ``` ``` ## # A tibble: 391 × 7 ## IQ parental_education income schooling_decision IQ_after_1y group ## <dbl> <fct> <dbl> <fct> <dbl> <fct> ## 1 436 low 114 highschool 439 low|highschool ## 2 320 low 101 highschool 341 low|highschool ## 3 415 low 183 highschool 433 low|highschool ## 4 497 low 174 highschool 501 low|highschool ## 5 366 low 155 highschool 382 low|highschool ## 6 458 low 224 highschool 474 low|highschool ## 7 433 low 148 highschool 452 low|highschool ## 8 417 low 221 highschool 425 low|highschool ## 9 361 low 160 highschool 372 low|highschool ## 10 496 low 130 highschool 506 low|highschool ## # ℹ 381 more rows ## # ℹ 1 more variable: plot_color <chr> ``` ] .panel[.panel-name[Visualize (ungrouped)] ``` r ggplot(dataset, aes(x=income, y=IQ) ) + geom_point(size=3) + theme_bw() ``` ] .panel[.panel-name[Plot (ungrouped)] <img src="../images/ungrouped.png" width="40%" /> ] .panel[.panel-name[Interpretation] There is not much of a relationship between parental income and child's measured IQ, except for a slight clumping in certain areas. ] ] --- # Dataset to be explored .panelset[ .panel[.panel-name[Data] ``` r dataset ``` ``` ## # A tibble: 391 × 7 ## IQ parental_education income schooling_decision IQ_after_1y group ## <dbl> <fct> <dbl> <fct> <dbl> <fct> ## 1 436 low 114 highschool 439 low|highschool ## 2 320 low 101 highschool 341 low|highschool ## 3 415 low 183 highschool 433 low|highschool ## 4 497 low 174 highschool 501 low|highschool ## 5 366 low 155 highschool 382 low|highschool ## 6 458 low 224 highschool 474 low|highschool ## 7 433 low 148 highschool 452 low|highschool ## 8 417 low 221 highschool 425 low|highschool ## 9 361 low 160 highschool 372 low|highschool ## 10 496 low 130 highschool 506 low|highschool ## # ℹ 381 more rows ## # ℹ 1 more variable: plot_color <chr> ``` ] .panel[.panel-name[Visualize (groups: parental education)] ``` r ggplot(dataset, aes(x=income, y=IQ, color = parental_education) ) + geom_point(size=3) + scale_color_manual(values = c(low="blue", high="yellow" ) )+ theme_bw() ## white background ``` ] .panel[.panel-name[Plot (groups: parental education)] <img src="../images/parental_education.png" width="40%" /> ] .panel[.panel-name[Interpretation] **Parental_education is very strongly related to income**. The **most likely explanation** here is that **it is the education which determines the income**. There is hardly any relation with child's measured IQ, except for one observation: there is a group of children whose parents had a higher education but have low income: All of these children have a high IQ. This may hint at the importance of role models: in any social setting, if the parents are interested in learning, this may encourage the child to train his or her mental abilities, too. ] ] --- # Dataset to be explored .panelset[ .panel[.panel-name[Data] ``` r dataset ``` ``` ## # A tibble: 391 × 7 ## IQ parental_education income schooling_decision IQ_after_1y group ## <dbl> <fct> <dbl> <fct> <dbl> <fct> ## 1 436 low 114 highschool 439 low|highschool ## 2 320 low 101 highschool 341 low|highschool ## 3 415 low 183 highschool 433 low|highschool ## 4 497 low 174 highschool 501 low|highschool ## 5 366 low 155 highschool 382 low|highschool ## 6 458 low 224 highschool 474 low|highschool ## 7 433 low 148 highschool 452 low|highschool ## 8 417 low 221 highschool 425 low|highschool ## 9 361 low 160 highschool 372 low|highschool ## 10 496 low 130 highschool 506 low|highschool ## # ℹ 381 more rows ## # ℹ 1 more variable: plot_color <chr> ``` ] .panel[.panel-name[Visualize (groups: schooling_decision)] ``` r ggplot(dataset, aes(x=income, y=IQ, color = schooling_decision) ) + geom_point(size=3) + scale_color_manual(values = c(secondary="blue", highschool="yellow" ) )+ theme_bw() ## white background ``` ] .panel[.panel-name[Plot (groups: schooling_decision)] <img src="../images/schooling_decision.png" width="40%" /> ] .panel[.panel-name[Interpretation] **Schooling decision is very strongly related to IQ**, as it is expected if the decision is informed by the result of the IQ test. **Note that for low-income parents, there is a tendency to send their children to secondary school even at quite high IQ values**. We have seen that low income typically goes with not having had a higher education. This hints at the **role of barriers**: Parents tend to keep their children in their own social sphere. ] ] --- # Dataset to be explored .panelset[ .panel[.panel-name[Data] ``` r dataset ``` ``` ## # A tibble: 391 × 7 ## IQ parental_education income schooling_decision IQ_after_1y group ## <dbl> <fct> <dbl> <fct> <dbl> <fct> ## 1 436 low 114 highschool 439 low|highschool ## 2 320 low 101 highschool 341 low|highschool ## 3 415 low 183 highschool 433 low|highschool ## 4 497 low 174 highschool 501 low|highschool ## 5 366 low 155 highschool 382 low|highschool ## 6 458 low 224 highschool 474 low|highschool ## 7 433 low 148 highschool 452 low|highschool ## 8 417 low 221 highschool 425 low|highschool ## 9 361 low 160 highschool 372 low|highschool ## 10 496 low 130 highschool 506 low|highschool ## # ℹ 381 more rows ## # ℹ 1 more variable: plot_color <chr> ``` ] .panel[.panel-name[Visualize (groups: crossed)] ``` r ggplot(dataset, aes(x=income, y=IQ, color = group) ) + geom_point(size=3) + scale_color_manual(values = c(`low|secondary`="lightblue", `low|highschool`="blue", `high|secondary`="orange", `high|highschool`="yellow")) + theme_bw() ## white background ``` ] .panel[.panel-name[Plot (groups: crossed)] <img src="../images/crossed.png" width="40%" /> ] .panel[.panel-name[Interpretation] .pull-left[ <img src="../images/crossed.png" width="85%" /> ] .pull-right[ Coloring by both grouping factors at once, it becomes obvious that **this dataset falls into quite sharply separated groups**. In general, **secondary school is the default decision for parents without higher education, while highschool is the default for parents who attended highschool themselves**. ] ] ] --- # Recapitulation: Chi-squared test .panelset[ .panel[.panel-name[Data] ``` r ## How many data points do we have in each group? crosstab <- table(dataset$parental_education, dataset$schooling_decision) crosstab ``` ``` ## ## secondary highschool ## low 119 77 ## high 72 123 ``` ] .panel[.panel-name[Expected Counts] ``` r ## outer(x1,x2) returns a matrix: ## x1[1] * x2[1] x1[1] * x2[2] ## x1[2] * x2[1] x2[2] * x2[2] outer(rowSums(crosstab), colSums(crosstab) ) / sum(crosstab) ``` ``` ## secondary highschool ## low 95.74425 100.25575 ## high 95.25575 99.74425 ``` ] .panel[.panel-name[Chi-squared test] ``` r chisq.test(crosstab) ``` ``` ## ## Pearson's Chi-squared test with Yates' continuity correction ## ## data: crosstab ## X-squared = 21.201, df = 1, p-value = 4.135e-06 ``` ] .panel[.panel-name[Interpretation] There is a clear preference for lowly educated parents to select secondary school for their child, and for highly educated parents to select highschool. Given that there is no very obvious dependence of child's IQ on the education of the parents, this again points to the role of barriers and to the tendency to keep children in one's own social sphere. ] ] --- # Comparing Group Means: Concepts .panelset[ .panel[.panel-name[ANOVA: Are all means equal?] <img src="../images/anova3.png" width="80%" /> ] .panel[.panel-name[One-Sample t-Test] <img src="../images/onesample_t.png" width="75%" /> ] .panel[.panel-name[Independent Samples t-Test] <img src="../images/indep_t.png" width="75%" /> ] .panel[.panel-name[Paired Samples t-Test] <img src="../images/paired_t.png" width="75%" /> ] ] --- # Comparing Group Means: Assumptions <img src="../images/assumptions.png" width="80%" /> --- # Comparing Group Means: Workflow <img src="../images/anova_ttest_flowchart.png" width="80%" /> --- # Do the Assumptions Hold? .panelset[ .panel[.panel-name[Normality of IQ: Q-Q Plot] .pull-left[ <img src="../images/qqIQ.png" width="85%" /> ] <br> ``` r ggqqplot(dataset,"IQ", facet.by="group", ylab = "IQ") ``` <br> The quantiles of the IQ distribution fall reasonably within the 95% confidence band, as they should (with the exception of high|highschool) at the extreme ends). ] .panel[.panel-name[Q-Q Plot Concept] .pull-left[ <img src="../images/qqIQ.png" width="85%" /> ] <br> - **x-axis** = theoretical quantiles of the Standard Normal distribution - **y-axis** of the line = quantiles of a sample [?of size equal to our sample?] from the Standard Normal distribution - **quantile** = a value q, such that q% of the values of a distribution are <= q - **y-axis** of the points: our sampled values - **confidence band**: taking the sampling error into account, 95% of the reference Standard Normal sample is within this regionthe sampling error of the line's y-values ] .panel[.panel-name[Normality of IQ: Tests (Code) ] ``` r shapiro_results <- list() for(this_group in levels(dataset$group)) { IQ_data <- dataset |> dplyr::filter(group == this_group) |> dplyr::pull(IQ) ## base R notation: ## IQ_data <- dataset$IQ[data$group == this_group] res <- shapiro.test(IQ_data) shapiro_results[["IQ"]][[this_group]] <- res } ``` ] .panel[.panel-name[Normality of IQ: Tests (Results)] .pull-left[ ``` ## $`low|secondary` ## ## Shapiro-Wilk normality test ## ## data: IQ_data ## W = 0.98569, p-value = 0.2419 ## ## ## $`low|highschool` ## ## Shapiro-Wilk normality test ## ## data: IQ_data ## W = 0.97457, p-value = 0.1251 ``` ] .pull-right[ ``` ## $`high|secondary` ## ## Shapiro-Wilk normality test ## ## data: IQ_data ## W = 0.98741, p-value = 0.6933 ## ## ## $`high|highschool` ## ## Shapiro-Wilk normality test ## ## data: IQ_data ## W = 0.97221, p-value = 0.01211 ``` ] ] ] --- # Do the Assumptions Hold? .panelset[ .panel[.panel-name[Normality of Income: Q-Q Plot] .pull-left[ <img src="../images/qqIncome.png" width="85%" /> ] <br> ``` r ggqqplot(dataset,"income", facet.by="group", ylab = "income") ``` <br> As with IQ, the income values are reasonably within the 95% confidence bands. ] .panel[.panel-name[Normality of Income: Tests] ``` r for(this_group in levels(dataset$group)) { income_data <- dataset |> dplyr::filter(group == this_group) |> dplyr::pull(income) ## base R notation: ## income_data <- dataset$income[data$group == this_group] res <- shapiro.test(income_data) shapiro_results[["income"]][[this_group]] <- res } ``` ] .panel[.panel-name[Normality of Income: Tests (Results)] .pull-left[ ``` ## $`low|secondary` ## ## Shapiro-Wilk normality test ## ## data: income_data ## W = 0.98008, p-value = 0.07472 ## ## ## $`low|highschool` ## ## Shapiro-Wilk normality test ## ## data: income_data ## W = 0.97528, p-value = 0.1385 ``` ] .pull-right[ ``` ## $`high|secondary` ## ## Shapiro-Wilk normality test ## ## data: income_data ## W = 0.97839, p-value = 0.251 ## ## ## $`high|highschool` ## ## Shapiro-Wilk normality test ## ## data: income_data ## W = 0.98018, p-value = 0.06745 ``` ] ] ] --- # Do the Assumptions Hold? .panelset[ .panel[.panel-name[Equal Variance in IQ] ``` r car::leveneTest(y = IQ ~ group, data = dataset) ``` ``` ## Levene's Test for Homogeneity of Variance (center = median) ## Df F value Pr(>F) ## group 3 8.9392 9.711e-06 *** ## 387 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] .panel[.panel-name[Equal Variance in Income] ``` r car::leveneTest(y = income ~ group, data = dataset) ``` ``` ## Levene's Test for Homogeneity of Variance (center = median) ## Df F value Pr(>F) ## group 3 9.1416 7.384e-06 *** ## 387 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] ] --- # Pairwise Equality of Means Tests (Code) .panelset[ .panel[.panel-name[Prepare Pairwise Comparisons] .pull-left[ .mediumtiny-code[ ``` r ## construct a comparison matrix ## function combn creates all subsets of a set comparisons <- combn( levels(dataset$group), ## the set to choose from 2 ## we want subsets of size 2 ) ## construct column names for the resulting matrix: colnames(comparisons) <- apply(comparisons, 2, ## traverse by column function(x) { ## x takes the value of the current column ## construct and return the name paste0(x[1],"_vs_",x[2]) } ) ``` ] ] .pull-right[ .verytiny-code[ ``` r comparisons ``` ``` ## low|secondary_vs_low|highschool low|secondary_vs_high|secondary ## [1,] "low|secondary" "low|secondary" ## [2,] "low|highschool" "high|secondary" ## low|secondary_vs_high|highschool low|highschool_vs_high|secondary ## [1,] "low|secondary" "low|highschool" ## [2,] "high|highschool" "high|secondary" ## low|highschool_vs_high|highschool high|secondary_vs_high|highschool ## [1,] "low|highschool" "high|secondary" ## [2,] "high|highschool" "high|highschool" ``` ] ] ] .panel[.panel-name[Run t.test on IQ (Code)] ``` r t_results_IQ <- apply(comparisons, ## a matrix, where each column ## holds the names of the two ## groups to be compared 2, ## traverse by column function(this_cmp) { IQ_1 <- dataset |> filter(group == this_cmp[1]) |> dplyr::pull(IQ) IQ_2 <- dataset |> filter(group == this_cmp[2]) |> dplyr::pull(IQ) t.test( x = IQ_1, y = IQ_2, var.equal=FALSE ) }) ``` ] .panel[.panel-name[Run t.test on income (Code)] ``` r t_results_income <- apply(comparisons, ## a matrix, where each column ## holds the names of the two ## groups to be compared 2, ## traverse by column function(this_cmp) { income_1 <- dataset |> filter(group == this_cmp[1]) |> pull(income) income_2 <- dataset |> filter(group == this_cmp[2]) |> pull(income) t.test( x = income_1, y = income_2, var.equal=FALSE ) }) ``` ] ] --- # Pairwise t-Test Results for IQ .panelset[ .panel[.panel-name[Results 1] ``` ## $`low|secondary_vs_low|highschool` ## ## Welch Two Sample t-test ## ## data: IQ_1 and IQ_2 ## t = -18.583, df = 193.16, p-value < 2.2e-16 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -177.8396 -143.7112 ## sample estimates: ## mean of x mean of y ## 268.3025 429.0779 ``` ] .panel[.panel-name[Results 2] ``` ## $`low|secondary_vs_high|secondary` ## ## Welch Two Sample t-test ## ## data: IQ_1 and IQ_2 ## t = 6.2337, df = 188.14, p-value = 2.925e-09 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## 36.37803 70.06034 ## sample estimates: ## mean of x mean of y ## 268.3025 215.0833 ``` ] .panel[.panel-name[Results 3] ``` ## $`low|secondary_vs_high|highschool` ## ## Welch Two Sample t-test ## ## data: IQ_1 and IQ_2 ## t = -17.151, df = 233.08, p-value < 2.2e-16 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -165.1896 -131.1484 ## sample estimates: ## mean of x mean of y ## 268.3025 416.4715 ``` ] .panel[.panel-name[Results 4] ``` ## $`low|highschool_vs_high|secondary` ## ## Welch Two Sample t-test ## ## data: IQ_1 and IQ_2 ## t = 27.29, df = 147, p-value < 2.2e-16 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## 198.4978 229.4914 ## sample estimates: ## mean of x mean of y ## 429.0779 215.0833 ``` ] .panel[.panel-name[Results 5] ``` ## $`low|highschool_vs_high|highschool` ## ## Welch Two Sample t-test ## ## data: IQ_1 and IQ_2 ## t = 1.5853, df = 187.01, p-value = 0.1146 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -3.081246 28.294001 ## sample estimates: ## mean of x mean of y ## 429.0779 416.4715 ``` ] .panel[.panel-name[Results 6] ``` ## $`high|secondary_vs_high|highschool` ## ## Welch Two Sample t-test ## ## data: IQ_1 and IQ_2 ## t = -25.728, df = 182.12, p-value < 2.2e-16 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -216.8324 -185.9440 ## sample estimates: ## mean of x mean of y ## 215.0833 416.4715 ``` ] .panel[.panel-name[Interpretation] **Comparisons involving different school types are highly significant as expected**, because the schooling_decision had been based on the IQ value to a large part. **Within a given school type, there is a significant difference between the mean IQ values of children sent to secondary school by lowly versus highly educated parents (Results 2)**, but no significant difference for children sent to highschool by lowly versus highly educated parents (Results 5). This again hints at a barrier effect: lowly educated parents consider highschool only at really high IQ values. ] ] --- # Pairwise Tests t-Test Results Income .panelset[ .panel[.panel-name[Results 1] ``` ## $`low|secondary_vs_low|highschool` ## ## Welch Two Sample t-test ## ## data: income_1 and income_2 ## t = -0.051933, df = 176.33, p-value = 0.9586 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -15.28467 14.50086 ## sample estimates: ## mean of x mean of y ## 156.9328 157.3247 ``` ] .panel[.panel-name[Results 2] ``` ## $`low|secondary_vs_high|secondary` ## ## Welch Two Sample t-test ## ## data: income_1 and income_2 ## t = -26.225, df = 145.87, p-value < 2.2e-16 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -238.5932 -205.1524 ## sample estimates: ## mean of x mean of y ## 156.9328 378.8056 ``` ] .panel[.panel-name[Results 3] ``` ## $`low|secondary_vs_high|highschool` ## ## Welch Two Sample t-test ## ## data: income_1 and income_2 ## t = -20.654, df = 216.49, p-value < 2.2e-16 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -201.1512 -166.1052 ## sample estimates: ## mean of x mean of y ## 156.9328 340.5610 ``` ] .panel[.panel-name[Results 4] ``` ## $`low|highschool_vs_high|secondary` ## ## Welch Two Sample t-test ## ## data: income_1 and income_2 ## t = -25.266, df = 139.95, p-value < 2.2e-16 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -238.8119 -204.1499 ## sample estimates: ## mean of x mean of y ## 157.3247 378.8056 ``` ] .panel[.panel-name[Results 5] ``` ## $`low|highschool_vs_high|highschool` ## ## Welch Two Sample t-test ## ## data: income_1 and income_2 ## t = -19.956, df = 197.82, p-value < 2.2e-16 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -201.3434 -165.1292 ## sample estimates: ## mean of x mean of y ## 157.3247 340.5610 ``` ] .panel[.panel-name[Results 6] ``` ## $`high|secondary_vs_high|highschool` ## ## Welch Two Sample t-test ## ## data: income_1 and income_2 ## t = 3.845, df = 185.88, p-value = 0.0001655 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## 18.62165 57.86751 ## sample estimates: ## mean of x mean of y ## 378.8056 340.5610 ``` ] .panel[.panel-name[Interpretation] As expected, **any comparison involving highly versus lowly educated parents is highly significant**, because education has a large influence on income. Results 1 and 6 compare the incomes of parents at the same education level, but with different schooling decisions. While for lowly educated parents (Results 1) income is uncorrelated with schooling_decision, **highly educated parents tend to have a slightly higher income if they send their child to secondary school rather than to highschool (p=0.0002)** -- a somewhat unexpected result. ] ] --- # Pairwise Tests with ggboxplot (IQ) .panelset[ .panel[.panel-name[Code] ``` r p <- ggboxplot(dataset, x = "group", y = "IQ", color = "group", palette = c("lightblue", "blue","orange", "yellow"), order = levels(dataset$group), ylab = "IQ", xlab = "group") + stat_compare_means(method = "t.test", method.args = list(var.equal = FALSE), ## The "comparisons" argument expects a list consisting ## of vectors of length 2, i.e. ## a list of the columns of our "comparisons" matrix. ## Trick to do the conversion: ## First convert the matrix to a data.frame, which is implicitly a list, ## then convert the data.frame to an explicit list: comparisons = comparisons|> as.data.frame() |> as.list() ) invisible(NULL) ## trick to prevent plotting the figure already here ``` ] .panel[.panel-name[Plot] .pull-left[ <img src="../images/ggbpIQ.png" width="80%" /> ] .pull-right[ The visual representation makes the points noted above more clear: - in comparisons involving different schooling decisions, IQ differs with high significance - in the two comparisons with the same schooling decision but different parental_education values + the IQ differences are generally much smaller + the difference is nevertheless significant for parents with low education The last observation may suggest that these parents consider highschool rather than secondary school only for very smart children (barrier effect). ] ] ] --- # Pairwise Tests with ggboxplot (income) .panelset[ .panel[.panel-name[Code] ``` r p <- ggboxplot(dataset, x = "group", y = "income", color = "group", palette = c("lightblue", "blue","orange", "yellow"), order = levels(dataset$group), ylab = "income", xlab = "group") + stat_compare_means(method = "t.test", method.args = list(var.equal = FALSE), ## The "comparisons" argument expects a list consisting ## of vectors of length 2, i.e. ## a list of the columns of our "comparisons" matrix. ## Trick to do the conversion: ## First convert the matrix to a data.frame, which is implicitly a list, ## then convert the data.frame to an explicit list: comparisons = comparisons|> as.data.frame() |> as.list() ) #invisible(NULL) ## trick to prevent plotting the figure already here ``` ] .panel[.panel-name[Plot] .pull-left[ <img src="../images/ggbpIncome.png" width="80%" /> ] .pull-right[ Note as observed below: - in comparisons involving different parental education levels, income differs with high significance - of the comparisons within one parental education level, only high|secondary and high|highschool differ significantly, with the former having a bit higher income than the latter ] ] ] --- # Preparing to Compare IQ to IQ_after_1y .panelset[ .panel[.panel-name[Original Data] Next **we will do a paired t-test**, comparing the originally measured IQ value to the value measured after one year at the school chosen by the parents. The **t.test()** function compares two vectors, so our **original dataset would be structured OK** for running the test on the columns IQ and IQ_after_1y, grouped by column "group": ``` ## # A tibble: 3 × 7 ## IQ parental_education income schooling_decision IQ_after_1y group ## <dbl> <fct> <dbl> <fct> <dbl> <fct> ## 1 436 low 114 highschool 439 low|highschool ## 2 320 low 101 highschool 341 low|highschool ## 3 415 low 183 highschool 433 low|highschool ## # ℹ 1 more variable: plot_color <chr> ``` However, the **ggboxplot()** function expects a **single data vector plus a second vector identifying the groups to be compared**. This gives us the chance to do some **data wrangling**! ] .panel[.panel-name[Transformation(1)] The values of columns IQ and the IQ_after_1y will be merged in the **data vector**. A **grouping vector** will hold the name of each value's column of origin: ``` r ## Rearrange the data table: data_long <- dataset |> tidyr::pivot_longer(cols = c(IQ,IQ_after_1y), names_to = "measurement", values_to = "IQ_value") ``` ``` ## # A tibble: 4 × 7 ## parental_education income schooling_decision group plot_color measurement ## <fct> <dbl> <fct> <fct> <chr> <chr> ## 1 low 114 highschool low|highs… blue IQ ## 2 low 114 highschool low|highs… blue IQ_after_1y ## 3 low 101 highschool low|highs… blue IQ ## 4 low 101 highschool low|highs… blue IQ_after_1y ## # ℹ 1 more variable: IQ_value <dbl> ``` ] .panel[.panel-name[Transformation(2)] **The column of origin is not enough if we want to trace each value back to the "group" column it belonged to**. A simple solution is to prepend the group names to the measurement values: ``` r data_long <- data_long |> mutate(measurement = paste(group, measurement,sep="_")) ``` .tiny-code[ ``` ## # A tibble: 6 × 7 ## parental_education income schooling_decision group plot_color measurement ## <fct> <dbl> <fct> <fct> <chr> <chr> ## 1 low 114 highschool low|highs… blue low|highsc… ## 2 low 114 highschool low|highs… blue low|highsc… ## 3 low 101 highschool low|highs… blue low|highsc… ## 4 low 101 highschool low|highs… blue low|highsc… ## 5 low 183 highschool low|highs… blue low|highsc… ## 6 low 183 highschool low|highs… blue low|highsc… ## # ℹ 1 more variable: IQ_value <dbl> ``` ] ] .panel[.panel-name[Transformation(3)] Next we make the new "measurement" column a factor, with a defined order of levels: ``` r data_long <- data_long |> mutate(measurement = factor(measurement, # set factor levels levels= c("low|secondary_IQ","low|secondary_IQ_after_1y", "low|highschool_IQ","low|highschool_IQ_after_1y", "high|secondary_IQ","high|secondary_IQ_after_1y" , "high|highschool_IQ","high|highschool_IQ_after_1y" ) )) ``` ] ] --- # Preparing to Compare IQ to IQ_after_1y .panelset[ .panel[.panel-name[Transformation(4)] **We want to compare "IQ"s and "IQ_after_1y"s within each group, but so far coloring is solely by group, not by time point**. Here we append a "4" to the colors of "IQ_after_1y" values, which makes them a bit darker. .mediumtiny-code[ ``` r data_long <- data_long |> mutate(plot_color= if_else(grepl("_after_1y$", measurement), paste0(plot_color,"4"), plot_color) ) # same in base R (a little bit simpler): # data_long$plot_color <- ifelse(grepl("_after_1y$", # measurement # ), # paste0(data$plot_color,"4"), # data$plot_color) ``` ] ] .panel[.panel-name[Long Data] .tiny-code[ ``` r data_long ``` ``` ## # A tibble: 782 × 7 ## parental_education income schooling_decision group plot_color measurement ## <fct> <dbl> <fct> <fct> <chr> <fct> ## 1 low 114 highschool low|high… blue low|highsc… ## 2 low 114 highschool low|high… blue4 low|highsc… ## 3 low 101 highschool low|high… blue low|highsc… ## 4 low 101 highschool low|high… blue4 low|highsc… ## 5 low 183 highschool low|high… blue low|highsc… ## 6 low 183 highschool low|high… blue4 low|highsc… ## 7 low 174 highschool low|high… blue low|highsc… ## 8 low 174 highschool low|high… blue4 low|highsc… ## 9 low 155 highschool low|high… blue low|highsc… ## 10 low 155 highschool low|high… blue4 low|highsc… ## # ℹ 772 more rows ## # ℹ 1 more variable: IQ_value <dbl> ``` ] ] .panel[.panel-name[Color Palette] Finally we make a color palette for the ggboxplot, associating colors (a vector) with the corresponding levels of the "measurements" factor: .mediumtiny-code[ ``` r ## find the unique pairings of (a) "plot_color" and (b) a "measurement" level -- ## In base R: d <- unique(data_long[,c("plot_color","measurement")]) ## make (b) the vector element names of (a) colors_long <- setNames(d$plot_color,d$measurement) ## In tidyverse style .. more complex: colors_long <- (data_long |> select(plot_color,measurement) |> unique() |> tibble::column_to_rownames("measurement") |> as.matrix() )[,1] ``` ] ] ] --- # Paired t-Test: IQ Change After One Year .panelset[ .panel[.panel-name[The Comparisons We Want to Make] ``` ## low|secondary_IQ_vs_low|secondary_IQ_after_1y ## [1,] "low|secondary_IQ" ## [2,] "low|secondary_IQ_after_1y" ## low|highschool_IQ_vs_low|highschool_IQ_after_1y ## [1,] "low|highschool_IQ" ## [2,] "low|highschool_IQ_after_1y" ## high|secondary_IQ_vs_high|secondary_IQ_after_1y ## [1,] "high|secondary_IQ" ## [2,] "high|secondary_IQ_after_1y" ## high|highschool_IQ_vs_high|highschool_IQ_after_1y ## [1,] "high|highschool_IQ" ## [2,] "high|highschool_IQ_after_1y" ``` ] .panel[.panel-name[Plotting Code] ``` r p <- ggboxplot(data_long, x = "measurement", y = "IQ_value", color = "measurement", palette = colors_long, order = levels(data_long$measurement), ylab = "IQ", xlab = "group") + stat_compare_means(method = "t.test", ## convert matrix columns to list elements: comparisons = pairwise_comparisons|> as.data.frame() |> as.list(), paired = TRUE ## pairwise test! ) #invisible(NULL) ## trick to prevent plotting the figure already here ``` ] .panel[.panel-name[Plot] .pull-left[ <img src="../images/ggbpIQ_change.png" width="80%" /> ] .pull-right[ The IQ measured after one year at the new school is clearly higher than IQ at schooling decision **only for children attending highschool**. This effect does not depend on parental education. ] ] ] --- # Linear Regression: Concepts .panelset[ .panel[.panel-name[Simple Linear Regression] <img src="../images/simple_regression.png" width="90%" /> ] .panel[.panel-name[Multiple Linear regression] <img src="../images/multiple_regression.png" width="80%" /> ] .panel[.panel-name[Anova/t_Test and Linear Regression] - **Both Anova/t-test and Linear Regression test hypotheses about structure in the observations**: + Anova/t-test: group structure + Linear regression: presence of predictor variables which induce non-randomness - **There is a deep mathematical relationship between the two approaches** (not to be explored here): + Regression models use the **t-statistic** to test the effect of a predictor on the dependent variable. + Regression models use The **F-statistic** known from Anova to express the overall fit of a linear model, and to test whether an additional predictor truly improves a model or should better be left out. ] ] --- # Simple LR: IQ vs Income in low|highschool .panelset[ .panel[.panel-name[Data] ``` r low_highschool <- subset(dataset, group == "low|highschool") low_highschool ``` ``` ## # A tibble: 77 × 7 ## IQ parental_education income schooling_decision IQ_after_1y group ## <dbl> <fct> <dbl> <fct> <dbl> <fct> ## 1 436 low 114 highschool 439 low|highschool ## 2 320 low 101 highschool 341 low|highschool ## 3 415 low 183 highschool 433 low|highschool ## 4 497 low 174 highschool 501 low|highschool ## 5 366 low 155 highschool 382 low|highschool ## 6 458 low 224 highschool 474 low|highschool ## 7 433 low 148 highschool 452 low|highschool ## 8 417 low 221 highschool 425 low|highschool ## 9 361 low 160 highschool 372 low|highschool ## 10 496 low 130 highschool 506 low|highschool ## # ℹ 67 more rows ## # ℹ 1 more variable: plot_color <chr> ``` ] .panel[.panel-name[Code] ``` r fit1 <- lm(IQ ~ income, low_highschool) ``` ] .panel[.panel-name[Results] .tiny-code[ ``` r summary(fit1) ``` ``` ## ## Call: ## lm(formula = IQ ~ income, data = low_highschool) ## ## Residuals: ## Min 1Q Median 3Q Max ## -92.943 -28.756 3.171 39.635 84.458 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 384.0107 18.4145 20.854 <2e-16 *** ## income 0.2865 0.1118 2.562 0.0124 * ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 47.71 on 75 degrees of freedom ## Multiple R-squared: 0.08045, Adjusted R-squared: 0.06819 ## F-statistic: 6.562 on 1 and 75 DF, p-value: 0.01243 ``` ] ] .panel[.panel-name[Interpretation] <img src="../images/fit1_summary.png" width="95%" /> ] .panel[.panel-name[Diagnostic Plots] .pull-left[ .tiny-code[ ``` r layout(matrix(1:4,nrow=2,byrow=TRUE)) plot(fit1,which=1) plot(fit1,which=2) plot(fit1,which=3) plot(fit1,which=5) layout(1) ## These four diagnostic plots are shown ## by default if you simply type plot(fit1) ## and hit return to see the next plot. ## There are 6 different diagnostic plots ## in total. They can be individually ## selected by the "which" parameter. ## Standardized residual_i = residual_i / stdError_i ## (absolute value is used on scale-location plot) ## Leverage_i = a measure of how much the ## i_th observation influences the regression line. ``` ] ] .pull-right[ <img src="Day2_R-Stats_files/figure-html/unnamed-chunk-77-1.png" width="85%" /> ] ] ] --- # Multiple LR: Role of "group: in IQ ~ income .panelset[ .panel[.panel-name[Code] ``` r fit2 <- lm(IQ ~ income + group, dataset) ``` ] .panel[.panel-name[Results] .tiny-code[ ``` r summary(fit2) ``` ``` ## ## Call: ## lm(formula = IQ ~ income + group, data = dataset) ## ## Residuals: ## Min 1Q Median 3Q Max ## -148.760 -44.441 0.023 42.070 156.675 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 275.52861 9.37653 29.385 < 2e-16 *** ## income -0.04605 0.04818 -0.956 0.33979 ## grouplow|highschool 160.79345 8.84840 18.172 < 2e-16 *** ## grouphigh|secondary -43.00289 13.99469 -3.073 0.00227 ** ## grouphigh|highschool 156.62432 11.78043 13.295 < 2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 60.5 on 386 degrees of freedom ## Multiple R-squared: 0.6845, Adjusted R-squared: 0.6812 ## F-statistic: 209.3 on 4 and 386 DF, p-value: < 2.2e-16 ``` ] ] .panel[.panel-name[Interpretation] <img src="../images/fit2_summary.png" width="90%" /> ] .panel[.panel-name[F-Statistic For Model Comparison] The F-statistic in the model summaries compares the predictive power of the model to the NULL model without any predictors, using an ANOVA framework. Function **anova()** can do this for any two models. Let us compare the fit2 model to a model on the full data with income as the only predictor: ``` r fit0 <- lm(IQ ~ income, dataset) ## now not restricted to one group level! anova(fit0, fit2) ``` ``` ## Analysis of Variance Table ## ## Model 1: IQ ~ income ## Model 2: IQ ~ income + group ## Res.Df RSS Df Sum of Sq F Pr(>F) ## 1 389 4469257 ## 2 386 1412851 3 3056406 278.34 < 2.2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` As expected, including "group" improves the model greatly. ] .panel[.panel-name[Diagnostic Plots] .pull-left[ .tiny-code[ ``` r layout(matrix(1:4,nrow=2,byrow=TRUE)) plot(fit2,which=1) plot(fit2,which=2) plot(fit2,which=3) plot(fit2,which=5) layout(1) ## Because the regression is now actually done in 3D space, the diagnostic 2D plots are no longer that easy to interpret. What is important is the normality of the residuals (plot 2) and an approximately horizontal red line in the last two plots. ``` ] ] .pull-right[ <img src="Day2_R-Stats_files/figure-html/unnamed-chunk-83-1.png" width="85%" /> ] ] ] --- # We Are Done --- Except for a Confession ... ---- --- # We Are Done --- Except for a Confession ... ---- ## We found a lot of structure in this dataset, didn't we? ### Structure can have the most unexpected causes ... --- # We Are Done --- Except for a Confession ... ---- <img src="../images/gorilla.png" width="90%" /> --- # We Are Done --- Except for a Confession ... ---- #### You may be interested in reading this paper and the follow-up papers discussing it: <img src="../images/yanai_lercher2020_abstract2.png" width="75%" /> --- class: inverse, center, middle # Session 3: Principal Component Analysis & Hierarchical Clustering --- ## Principal Component Analysis (PCA) - Overview .panelset[ .panel[.panel-name[What is PCA?] .pull-left[ **Principal Component Analysis (PCA)** is a **linear** dimensionality reduction technique that transforms high-dimensional data into a lower-dimensional space by finding **new, uncorrelated axes** (called **principal components**) that **maximize the variance** in the data. - **Dimensionality Reduction**: PCA sets the focus on just a few components and their corresponding genes, which contribute the most to the variation in the data. - **Applications**: PCA is widely used in gene expression data (e.g., RNA-Seq) to identify patterns, reduce noise, and cluster samples based on similar gene expression profiles. ] .pull-right[ <div class="image-with-caption"> <img src="images/pca_plot.png" alt="PCA Plot" width="100%"> <p class="caption"><font size="3"><b>source:</b> PMID: 34440590 </font></p> </div> ] ] .panel[.panel-name[Applications of PCA] .pull-left[ - **Data Visualization** - Reduce high-dimensional data to 2D/3D for easy interpretation - Capture main sources of variation - **Batch Effect Detection** - Detect (and remove technical) variability between experiments - Improve biological signal clarity - **Population Genetics** - Visualize genetic diversity and ancestry - Identify population structure and relationships ] .pull-right[ - **Noise Reduction & Preprocessing** - Discard irrelevant data and noise - Clean and compress data for further analysis (e.g., clustering) - **Feature Selection** - Identify the most informative genes or variables - Focus on principal components that capture the most variance - **Gene Expression Analysis** - Uncover patterns in large datasets (RNA-Seq, microarrays) - Cluster samples by expression profiles (e.g., healthy vs. diseased) ] ] .panel[.panel-name[Steps in PCA] .pull-left[ 1. **Standardize the Data**: - Biological data may vary in scale (e.g., gene expression values), so data should be standardized (mean = 0, variance = 1). 2. **Compute Covariance Matrix**: - Determine how the variables (genes) relate to each other. 3. **Eigenvectors and Eigenvalues**: - **Eigenvectors** are the directions of the maximum variance (the PCs). - **Eigenvalues** tell us how much of the variance each PC explains. 4. **Select Principal Components**: - Choose the number of PCs to retain, usually based on the total variance explained (e.g., 90% of variance). ] .pull-right[ <div class="image-with-caption"> <img src="images/pca_scree_plot.png" alt="Scree Plot" width="100%"> <p class="caption"><font size="3"><b>source:</b> Iris dataset </font></p> </div> ] ] .panel[.panel-name[Interpreting PCA] .pull-left[ - **Principal Components**: The first few PCs capture the majority of the variance in the data. - **Loadings**: Each PC is a linear combination of the original variables (genes). The loadings tell us which genes contribute most to each PC. - **Biological Meaning**: - PC1 might represent major biological differences (e.g., healthy vs. diseased). - PC2 could capture subtler differences. - PC1 is sometime not Component of interest (!!). ] .pull-right[ <div class="image-with-caption"> <img src="images/pca_loading_plot_seurat.png" alt="PCA Loading Plot" width="90%"> <p class="caption"><font size="3"><b>source:</b> https://satijalab.org/seurat/articles/pbmc3k_tutorial.html </font></p> </div> ] ] ] --- ## Principal component analysis in R .panelset[ .panel[.panel-name[Data] ``` r ## Load and display the data data("iris") head(iris) ``` ``` ## Sepal.Length Sepal.Width Petal.Length Petal.Width Species ## 1 5.1 3.5 1.4 0.2 setosa ## 2 4.9 3.0 1.4 0.2 setosa ## 3 4.7 3.2 1.3 0.2 setosa ## 4 4.6 3.1 1.5 0.2 setosa ## 5 5.0 3.6 1.4 0.2 setosa ## 6 5.4 3.9 1.7 0.4 setosa ``` We will use the **iris** dataset, which contains measurements of four features (Sepal Length, Sepal Width, Petal Length, Petal Width) for three species of flowers. ] .panel[.panel-name[Perform PCA] ``` r ## Perform PCA on the iris dataset pca_result <- prcomp(iris[, -5], scale. = TRUE) summary(pca_result) ``` ``` ## Importance of components: ## PC1 PC2 PC3 PC4 ## Standard deviation 1.7084 0.9560 0.38309 0.14393 ## Proportion of Variance 0.7296 0.2285 0.03669 0.00518 ## Cumulative Proportion 0.7296 0.9581 0.99482 1.00000 ``` We perform **Principal Component Analysis (PCA)** on the **iris** dataset, excluding the species column. PCA helps reduce the dataset's dimensionality while retaining most of its variance. ] .panel[.panel-name[Scatterplot (PC1 vs PC2)] .pull-left[ ``` r pca_scores <- as.data.frame(pca_result$x) ggplot(pca_scores, aes(PC1, PC2, color = iris$Species)) + geom_point(size = 3) + ggtitle("PCA Scatterplot (PC1 vs PC2)") + xlab( paste("PC1 (", round( summary(pca_result)$importance[2,1] * 100, 1), "% variance)")) + ylab( paste("PC2 (", round( summary(pca_result)$importance[2,2] * 100, 1), "% variance)")) + theme_minimal() ``` ] .pull-right[ <!-- --> ] This scatterplot shows how samples (flower species) cluster based on the first two principal components. **PC1** explains the most variance, followed by **PC2**. ] .panel[.panel-name[Variance Explained] .pull-left[ ``` r ## Calculate explained variance eigenvalues <- pca_result$sdev^2 explained_variance <- eigenvalues / sum(eigenvalues) cumulative_variance <- cumsum(explained_variance) # Plot explained variance explained_variance_df <- data.frame( PC = factor(paste0("PC", 1:4), levels = paste0("PC", 1:4)), Proportion = explained_variance ) ggplot(explained_variance_df, aes(x = PC, y = Proportion)) + geom_bar(stat = "identity", fill = "steelblue") + ggtitle("Proportion of Variance Explained") + xlab("Principal Component") + ylab("Proportion of Variance") + theme_minimal() ``` ] .pull-right[ <!-- --> ] The **Proportion of Variance Explained** plot shows how much variance is captured by each principal component. ] .panel[.panel-name[Scree Plot] .pull-left[ ``` r ## Scree plot showing cumulative variance explained cumulative_variance_df <- data.frame( PC = 1:4, CumulativeVariance = cumulative_variance ) ggplot(cumulative_variance_df, aes(x = PC, y = CumulativeVariance)) + geom_line(group = 1, color = "steelblue") + geom_point(size = 3, color = "steelblue") + ggtitle("Cumulative Variance Explained") + xlab("Principal Component") + ylab("Cumulative Variance") + scale_y_continuous(limits = c(0, 1)) + theme_minimal() ``` ] .pull-right[ <!-- --> ] This **Scree Plot** helps determining how many PCs are needed to explain most of the data’s variance. ] ] --- ## Hierarchical Clustering - Overview .panelset[ .panel[.panel-name[What?] .pull-left[ **Hierarchical Clustering** is an **unsupervised** machine learning technique used to group data into clusters based on similarity, creating a hierarchy of clusters. It builds a **tree-like structure** (dendrogram) that represents nested clusters at various levels. - **Agglomerative Method**: Each data point starts as its own cluster, and pairs of clusters are merged based on their similarity until one large cluster forms. - **Divisive Method**: The reverse process, where one large cluster is split recursively into smaller clusters. ] .pull-right[ <div class="image-with-caption"> <img src="../images/dendrogram.png" alt="Dendrogram Example" width="100%"> <p class="caption"><font size="3"><b>source:</b> https://www.gastonsanchez.com/visually-enforced/how-to/2012/10/03/Dendrograms/ </font></p> </div> ] ] .panel[.panel-name[Applications] .pull-left[ - **Gene Expression Analysis** - Group genes or samples based on expression profiles - Identify clusters of similar expression patterns (e.g., healthy vs. diseased) - **Customer Segmentation** - Group customers into distinct clusters based on purchasing behavior - Find patterns in customer demographics and preferences - **Document Clustering** - Group similar documents based on content similarity - Useful in text mining and information retrieval ] .pull-right[ - **Image Segmentation** - Cluster similar pixels in an image - Separate objects or regions based on color or intensity - **Pattern Recognition** - Group data points with similar characteristics - Useful in applications like facial recognition or biometrics - **Marketing Analysis** - Understand market segments and target specific groups of customers - Create personalized marketing strategies based on clustering ] ] .panel[.panel-name[Algorithm] .pull-left[ 1. **Calculate Distance Matrix**: - Measure the distance (e.g., Euclidean) between every pair of data points. 2. **Choose a Linkage Method**: - **Single Linkage**: Merge clusters based on the closest pair of points. - **Complete Linkage**: Merge based on the farthest pair of points. - **Average Linkage**: Merge based on the average distance between points. 3. **Build the Dendrogram**: - Recursively merge clusters and represent the process in a tree structure. 4. **Cut the Dendrogram**: - Choose a threshold to determine the number of clusters. ] .pull-right[ <div class="image-with-caption"> <img src="../images/hclust_dendrogram.png" alt="Dendrogram Hierarchical Clustering" width="100%"> <p class="caption"><font size="3"><b>source:</b> https://www.datanovia.com/en/lessons/examples-of-dendrograms-visualization/ </font></p> </div> ] ] .panel[.panel-name[Interpretation] .pull-left[ - **Dendrogram**: A tree-like diagram that shows the sequence of cluster merges. The height at which two clusters are joined represents their similarity (shorter links indicate more similar clusters). - **Clusters**: You can choose how many clusters to create by cutting the dendrogram at a certain height. - **Biological Meaning**: - Clusters can group samples or genes with similar biological characteristics. - Patterns may help in identifying disease subtypes, evolutionary relationships, or functional similarities. ] .pull-right[ <div class="image-with-caption" height="600"> <img src="../images/hclust_heatmap.png" alt="Heatmap and Dendrogram" width="90%"> <p class="caption"><font size="3"><b>source:</b> https://bioinformatics.ccr.cancer.gov/docs/data-visualization-with-r/Lesson5_intro_to_ggplot/ </font></p> </div> ] ] ] --- ## Hierarchical Clustering in R .panelset[ .panel[.panel-name[Data] ``` r ## Load and display the data data("iris") head(iris) ``` ``` ## Sepal.Length Sepal.Width Petal.Length Petal.Width Species ## 1 5.1 3.5 1.4 0.2 setosa ## 2 4.9 3.0 1.4 0.2 setosa ## 3 4.7 3.2 1.3 0.2 setosa ## 4 4.6 3.1 1.5 0.2 setosa ## 5 5.0 3.6 1.4 0.2 setosa ## 6 5.4 3.9 1.7 0.4 setosa ``` We will use the **iris** dataset, which contains measurements of four features (Sepal Length, Sepal Width, Petal Length, Petal Width) for three species of flowers. ] .panel[.panel-name[Perform Hierarchical Clustering] .pull-left[ ``` r ## Calculate the distance matrix & ## perform hierarchical clustering distance_matrix <- dist(iris[, -5]) hclust_result <- hclust(distance_matrix, method = "complete") # Plot the dendrogram plot(hclust_result, labels = iris$Species, main = "Dendrogram of Iris Dataset") ``` We perform **Hierarchical Clustering** on the **iris** dataset using the complete linkage method. The dendrogram shows how the data points are grouped. ] .pull-right[ <!-- --> ] ] .panel[.panel-name[Heatmap with Dendrogram] .pull-left[ ``` r ## Create a heatmap with hierarchical clustering library(pheatmap) # Define annotation colors for species annotation_colors <- list( Species = c(setosa = "red", versicolor = "blue", virginica = "green") ) # Perform hierarchical clustering pheatmap(as.matrix(iris[, -5]), clustering_distance_rows = "euclidean", annotation_colors = annotation_colors, clustering_method = "complete", annotation_col = iris["Species"], main = "Heatmap with Dendrogram") ``` ] .pull-right[ <!-- --> The heatmap shows the clustering of samples and features along with a dendrogram, which helps identify the groupings. ] ] .panel[.panel-name[Choosing the Number of Clusters] .pull-left[ ``` r library(dplyr) ## Cut the dendrogram to obtain clusters cutree(hclust_result, k = 3) %>% table() ``` ``` ## . ## 1 2 3 ## 50 72 28 ``` You can choose the number of clusters by cutting the dendrogram at a certain height. Here, we cut the dendrogram into **3 clusters**. The colored rectangles show the division into 3 clusters, based on the dendrogram structure. This approach allows you to select the number of clusters that best fits your data, based on the hierarchical clustering method. ] .pull-right[ ``` r ## Visualizing clusters cluster_labels <- cutree(hclust_result, k = 3) plot(hclust_result) rect.hclust(hclust_result, k = 3, border = 2:4) ``` <!-- --> ] ] ]